Manual data handling can no longer keep pace with the volume of data modern businesses generate, which makes data automation software a necessity for modern operations.

Data automation changes how organizations handle information by automating the collection, processing, and interpretation of data. By removing manual work from tasks like data collection, cleansing, and validation, companies can increase productivity and reduce errors, freeing their teams to focus on extracting meaningful business value.

Ready to revolutionize your data management? Data automation is key to staying ahead in today’s digital world. Whether you’re a small business or a large corporation, let Raven Labs guide you in harnessing the power of automation. Contact us today to unlock your data’s full potential.

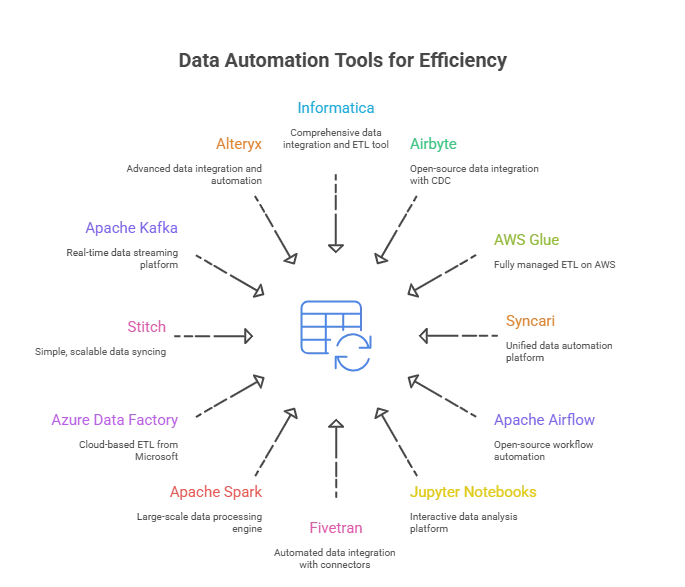

Top 12 Data Automation Software Options

Here are 12 top data automation tools that can help you simplify, scale, and optimize your data workflows.

- Informatica

- Airbyte

- AWS Glue

- Syncari

- Apache Airflow

- Jupyter Notebooks

- Fivetran

- Apache Spark

- Azure Data Factory

- Stitch

- Apache Kafka

- Alteryx

What Is Data Automation?

Data automation is the process of using technology to collect, process, transform, and analyze data with minimal or no human intervention.

By automating repetitive data management tasks such as extraction, transformation, loading (ETL), validation, and integration, businesses can significantly streamline workflows, enhance data accuracy, and accelerate data-driven decision-making.

Data automation ensures faster processing of large data volumes from diverse sources, reduces manual errors, and enables organizations to focus on extracting actionable insights rather than spending time on routine operations.

Because data automation streamlines the entire data lifecycle, it has become a crucial strategy for businesses looking to maintain a competitive edge in the digital age.

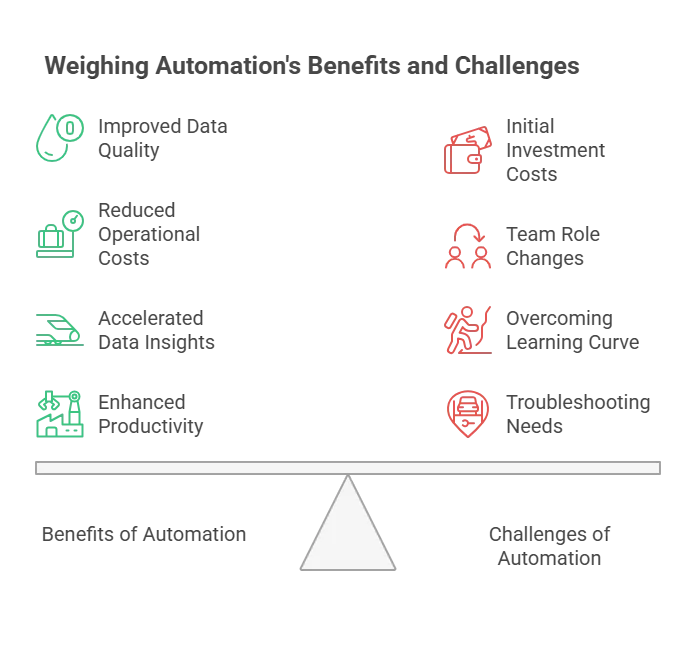

Key Benefits of Data Automation

Implementing data automation isn’t just about saving time; it’s about transforming how your business handles data. Below are the key benefits that highlight why automation is essential for modern data-driven organizations.

Improve Data Quality

Data automation tools ensure consistent, accurate, and standardized data by reducing human errors associated with manual handling. Automated data cleansing, validation, and transformation processes help maintain high data integrity. This leads to more reliable analytics, better compliance, and stronger decision-making foundations.
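To make "automated cleansing and validation" concrete, here is a minimal sketch of the kind of rule-based check such tools run on every record. The field names (`name`, `email`, `country`) and the rules themselves are hypothetical examples chosen for illustration, not the behavior of any specific product: values are standardized first, then each record is either accepted or rejected with a list of reasons.

```python
import re
from dataclasses import dataclass

# Hypothetical rule: a loose email shape check, not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class ValidationResult:
    clean: list
    rejected: list


def cleanse_and_validate(records):
    """Standardize raw customer records and split them into clean and rejected sets."""
    clean, rejected = [], []
    seen_emails = set()
    for raw in records:
        # Standardize: trim whitespace and normalize case before validating.
        name = (raw.get("name") or "").strip().title()
        email = (raw.get("email") or "").strip().lower()
        country = (raw.get("country") or "").strip().upper()

        errors = []
        if not name:
            errors.append("missing name")
        if not EMAIL_RE.match(email):
            errors.append("invalid email")
        if len(country) != 2:
            errors.append("country must be a 2-letter code")
        if email in seen_emails:
            errors.append("duplicate email")

        if errors:
            # Keep the original record so the failure can be reviewed later.
            rejected.append({"record": raw, "errors": errors})
        else:
            seen_emails.add(email)
            clean.append({"name": name, "email": email, "country": country})
    return ValidationResult(clean, rejected)
```

Because duplicates are detected after normalization, `"ADA@Example.com "` and `"ada@example.com"` are treated as the same customer, which is exactly the kind of inconsistency that slips through manual handling.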

Reduce Operational Costs

By automating repetitive and time-consuming data processes such as extraction, transformation, loading, and cleansing, organizations lower labor costs and minimize costly mistakes. Automation also reduces the need for extensive manual intervention, freeing up your workforce for more strategic, value-added activities.

Accelerate Data Insights

Automation speeds up data workflows from ingestion to analysis by enabling real-time or near-real-time processing. This accelerated pipeline allows businesses to access fresh, actionable insights faster, enhancing their agility in responding to market changes, customer needs, and operational issues.

Enhance Productivity and Scalability

Automated data processes scale to handle growing data volumes without proportional increases in resources or complexity. Teams become more productive as routine tasks are streamlined or eliminated, freeing them to focus on innovation and analysis. Automated orchestration also supports complex workflows across hybrid and cloud environments.

Common Challenges in Data Automation

While data automation offers significant advantages, it also comes with its own set of challenges. Understanding these hurdles can help you plan effectively and maximize the success of your automation strategy.

Manage Initial Investment and Setup Costs

Data automation often requires upfront spending on software licenses, cloud infrastructure, and customization. Careful planning, ROI analysis, and phased implementation help contain these costs.

Handle Team Role Changes

Automation shifts responsibilities, creating a need for upskilling and role adjustments within teams. Providing training and clear communication supports smooth transitions.

Overcome the Learning Curve

New tools and workflows require users to learn new systems, which can slow adoption. Prioritizing user-friendly platforms and providing onboarding resources eases this process.

Address Troubleshooting Needs

Debugging automated workflows can be complex and time-consuming. Choosing tools with built-in monitoring and diagnostics, alongside strong support, helps maintain reliability.
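Much of that reliability comes from two simple habits: logging every attempt and retrying transient failures instead of failing silently. The sketch below shows the pattern in plain Python, assuming a task is any zero-argument callable; the function name `run_with_retries` and its parameters are hypothetical, and production tools layer alerting and dashboards on top of the same idea.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def run_with_retries(task, *, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Run a task, logging each failure and retrying with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            result = task()
            log.info("task %s succeeded on attempt %d", task.__name__, attempt)
            return result
        except Exception as exc:
            # Every failure is logged with context, which is what makes debugging possible.
            log.warning("task %s failed on attempt %d: %s", task.__name__, attempt, exc)
            if attempt == max_attempts:
                # Re-raise so the orchestrator or alerting layer sees the final failure.
                raise
            # Wait 0.5s, 1s, 2s, ... between attempts (with the default base_delay).
            sleep(base_delay * 2 ** (attempt - 1))
```

The `sleep` parameter is injectable so the retry schedule can be tested without actually waiting; in normal use the default `time.sleep` applies.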

Types of Data Automation Tools

Data automation tools come in various forms, each tailored to specific stages of the data lifecycle. From integration to cleansing, here are the main categories and leading tools that power efficient, scalable data operations.

ETL Tools for Data Integration

ETL (Extract, Transform, Load) tools automate the movement and transformation of data from various sources into centralized repositories. Leading solutions like Fivetran, Informatica PowerCenter, Talend, Matillion, and Hevo Data offer prebuilt connectors, automated schema management, support for cloud and on-premises sources, and low-code or no-code development environments, streamlining data integration for analytics and business intelligence.
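Under the connectors and visual designers, every ETL tool performs the same three steps. The following is a minimal sketch using only Python's standard library, where an in-memory CSV string stands in for a source system and SQLite stands in for a warehouse; the `orders` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3


def extract(csv_text):
    """Extract: read rows from a CSV source (an in-memory string here)."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Transform: cast types, normalize values, and drop rows without an order id."""
    out = []
    for row in rows:
        if not row["order_id"].strip():
            continue  # unusable row: no primary key
        out.append((
            int(row["order_id"]),
            row["region"].strip().upper(),
            round(float(row["amount"]), 2),
        ))
    return out


def load(conn, rows):
    """Load: upsert transformed rows into a warehouse table (SQLite stands in here)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    # INSERT OR REPLACE keeps reruns idempotent: the same order id overwrites itself.
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


source = "order_id,region,amount\n1, emea ,19.999\n,apac,5\n2,amer,42\n"
conn = sqlite3.connect(":memory:")
load(conn, transform(extract(source)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# -> [(1, 'EMEA', 20.0), (2, 'AMER', 42.0)]
```

Making the load step idempotent is a deliberate choice: automated pipelines get rerun after failures, and a rerun should not create duplicate rows.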

Data Pipeline Tools for Seamless Processing

Data pipeline tools facilitate the continuous and automated flow of data across systems. Notable options such as Apache Kafka, Apache NiFi, Hevo Data, Stitch, and AWS Glue enable real-time and batch processing, providing capabilities for high throughput, fault tolerance, visual pipeline creation, and robust data lineage tracking. These tools are critical for managing modern, complex, and large-scale data workflows.
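Conceptually, a pipeline is a chain of stages where each stage consumes the previous one's output as a stream. The sketch below illustrates that idea with Python generators: records flow through parse, filter, and micro-batch stages one at a time rather than being loaded into memory all at once. It is a teaching example under simple assumptions (comma-separated `user,action` lines), not how Kafka, NiFi, or Glue pipelines are actually configured.

```python
from itertools import islice
from typing import Iterable, Iterator


def read_events(lines: Iterable[str]) -> Iterator[dict]:
    """Parse raw 'user,action' lines into event dicts, skipping malformed input."""
    for line in lines:
        parts = line.strip().split(",")
        if len(parts) == 2 and all(parts):
            yield {"user": parts[0], "action": parts[1]}


def only(action: str, events: Iterable[dict]) -> Iterator[dict]:
    """Filter stage: keep only events of one action type."""
    return (e for e in events if e["action"] == action)


def micro_batches(events: Iterable[dict], size: int) -> Iterator[list]:
    """Group a stream into fixed-size batches for a downstream sink."""
    it = iter(events)
    while batch := list(islice(it, size)):
        yield batch


raw = ["u1,click", "bad line", "u2,view", "u3,click", "u4,click", ",click"]
for batch in micro_batches(only("click", read_events(raw)), size=2):
    print([e["user"] for e in batch])
```

Batching at the end of the chain mirrors the trade-off many pipeline tools expose: smaller batches mean fresher data, larger batches mean fewer, cheaper writes.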

Workflow Automation Platforms for Task Orchestration

Workflow automation and orchestration platforms coordinate sequences of data and business tasks, often visualizing, scheduling, and monitoring their execution. Platforms like Apache Airflow, Prefect, Google Workflows, AWS Step Functions, and Orkes allow organizations to define dependencies, automate complex logic, and ensure reliable task execution across hybrid environments, making them foundational for modern data operations.
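At the core of every orchestrator is a dependency graph: a task runs only after everything it depends on has finished. The sketch below shows just that ordering logic using Python's standard `graphlib` module (Python 3.9+). The task names are hypothetical, and real platforms such as Airflow or Prefect add scheduling, retries, distributed workers, and monitoring on top of it.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DEPENDENCIES = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "quality_checks": {"transform"},
    "load_warehouse": {"quality_checks"},
    "refresh_dashboard": {"load_warehouse"},
}


def run_pipeline(dependencies, run_task):
    """Execute tasks in waves; tasks in the same wave have no unmet dependencies."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only to make the order deterministic
        for task in ready:
            run_task(task)
            sorter.done(task)  # unblocks any task waiting on this one
        waves.append(ready)
    return waves


print(run_pipeline(DEPENDENCIES, lambda task: print("running", task)))
```

The two extract tasks land in the same wave because neither depends on the other, which is where an orchestrator would run them in parallel.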

Data Quality and Cleansing Tools for Reliable Data

These tools keep data accurate and clean by automating profiling, validation, error correction, and enrichment. Solutions such as Mammoth Analytics, CleanSwift Pro, DataPure AI, OpenRefine, Astera Centerprise, and Trifacta Wrangler excel with automated anomaly detection, AI-assisted cleaning, collaboration features, and quick integration with data sources. They empower organizations to maintain high-quality, trustworthy data sets ready for advanced analytics and reporting.
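Profiling and anomaly detection are the two automated checks these tools run most often. Here is a minimal sketch of both for a single numeric column. For anomaly detection it uses the modified z-score (based on the median and the median absolute deviation), a common robust heuristic chosen here for illustration; the commercial tools named above use their own, often more sophisticated, methods. The 3.5 threshold is a commonly used default, not a universal rule.

```python
import statistics


def profile_column(values):
    """Summarize a numeric column: counts, nulls, distinct values, range, and median."""
    present = [v for v in values if v is not None]
    return {
        "count": len(values),
        "nulls": len(values) - len(present),
        "distinct": len(set(present)),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "median": statistics.median(present) if present else None,
    }


def flag_anomalies(values, threshold=3.5):
    """Return indices whose modified z-score (median/MAD based) exceeds the threshold."""
    present = [v for v in values if v is not None]
    if len(present) < 3:
        return []  # too little data to judge what "normal" looks like
    med = statistics.median(present)
    mad = statistics.median(abs(v - med) for v in present)
    if mad == 0:
        return []  # most values identical; the score is undefined
    return [
        i for i, v in enumerate(values)
        if v is not None and abs(0.6745 * (v - med) / mad) > threshold
    ]


amounts = [20.0, 22.5, 19.0, None, 21.0, 950.0, 20.5]
print(profile_column(amounts))
print(flag_anomalies(amounts))  # -> [5], the 950.0 entry
```

A median-based score is used instead of a mean-based one because a single extreme value like 950.0 would inflate the mean and standard deviation enough to hide itself.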

Top 12 Data Automation Software Options

Here is the list of 12 powerful data automation tools that help teams improve efficiency, reduce manual work, and unlock real-time insights at scale.

Informatica

A comprehensive data integration tool for managing and automating complex data flows and ETL tasks. Informatica offers robust data orchestration, powerful data quality management, metadata solutions, and supports workflow automation for enterprise data management scenarios. It’s highly regarded for data governance, regulatory compliance, and enabling efficient cross-platform data integration.

Airbyte

Open-source and cloud-native data integration and pipeline software. Airbyte automates frequent, incremental data syncs from hundreds of sources, with change data capture (CDC), custom connector support, and integration with data transformation tools like dbt. Its intuitive UI and ELT automation make it a popular choice for scalable data orchestration and cloud data automation.
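Syncing only what changed, rather than re-copying whole tables, is what keeps these syncs cheap and frequent. The sketch below shows the simplest version of the idea, cursor-based incremental sync, with two SQLite databases standing in for source and destination. Note that this is a swapped-in, simpler technique than log-based CDC: it cannot see hard deletes and assumes a hypothetical `updated_at` column is set on every change. The table layout and the `state` dictionary are illustrative assumptions, not Airbyte's internals.

```python
import sqlite3


def incremental_sync(source, dest, state):
    """Copy only rows changed since the last sync, using updated_at as a cursor.

    Cursor-based sync, not log-based CDC: hard deletes are not detected, and
    every change must bump updated_at (ISO-8601 strings compare correctly as text).
    """
    cursor = state.get("orders_cursor", "")
    rows = source.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (cursor,),
    ).fetchall()
    # Upsert so a changed row overwrites its previous copy in the destination.
    dest.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    dest.commit()
    if rows:
        state["orders_cursor"] = rows[-1][2]  # advance to the newest change seen
    return len(rows)
```

Persisting `state` between runs is what makes the next sync incremental; losing it simply falls back to a full re-sync, since an empty cursor matches every row.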

AWS Glue

A fully managed, serverless ETL service and data pipeline tool on AWS, well suited to big data workloads. AWS Glue automates the discovery, cleaning, transformation, and movement of data across AWS and connected data stores, using visual development features, automated schema discovery and management, and machine learning-enhanced data cleansing.

Syncari

Unified data automation platform designed to automate data integration, transformation, deduplication, and unification across all systems in near real-time. Syncari leverages AI-powered automations for workflow orchestration, data enrichment, and robust data quality projects, centralizing governance for customer and operational data.

Apache Airflow

Industry-standard open-source data orchestration software and workflow automation platform. Airflow enables users to author, schedule, and monitor complex ETL/ELT pipelines, manage workflows as code, and integrate with a variety of data transformation and big data processing tools.

Jupyter Notebooks

Widely used interactive platform for automating data analysis, transformation, and visualization tasks using Python or other languages. Jupyter helps automate and document data cleansing, transformation, and reporting processes, making it ideal for data exploration, prototyping, and building repeatable data workflows.

Fivetran

Automated data integration and movement platform for building reliable, low-maintenance data pipelines. Fivetran adapts automatically to schema drift, automates transformations, and offers more than 700 connectors for syncing data between SaaS applications, databases, files, and cloud warehouses, making it a common building block of modern cloud data automation stacks.
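"Schema drift" means a source starts sending fields the destination table does not have yet. A common automated response is additive schema evolution: add the missing column instead of failing the load. The sketch below shows that idea with SQLite; it is an illustration of the concept, not Fivetran's actual behavior or settings. New columns are added as `TEXT` for simplicity, and the table name is assumed to come from trusted configuration, since it is interpolated into SQL.

```python
import sqlite3


def load_with_schema_drift(conn, table, records):
    """Insert dict records, adding a column whenever a record carries a new field.

    Assumes `table` is a trusted identifier. New columns are TEXT, and rows
    loaded before a column existed read NULL for it.
    """
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    for record in records:
        for column in record:
            if column not in existing:
                conn.execute(f'ALTER TABLE {table} ADD COLUMN "{column}" TEXT')
                existing.add(column)
        cols = ", ".join(f'"{c}"' for c in record)
        placeholders = ", ".join("?" for _ in record)
        conn.execute(
            f"INSERT INTO {table} ({cols}) VALUES ({placeholders})",
            list(record.values()),
        )
    conn.commit()
    return sorted(existing)
```

For example, if a CRM starts sending a `phone` field, the next load adds a `phone` column and carries on, and earlier rows simply show `NULL` for it.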

Apache Spark

Open-source distributed processing engine for large-scale data. Spark automates batch and streaming data processing, large-scale data cleansing, and the advanced transformations required for analytics and AI/ML, making it a core engine for big data automation platforms.

Azure Data Factory

Cloud-based ETL automation tool and data orchestration software from Microsoft, automating the movement and transformation of data across hybrid and multi-cloud environments. Azure Data Factory excels at real-time and batch processing, workflow automation, and integrating with Azure’s suite of analytics, cleansing, and transformation tools.

Stitch

SaaS data pipeline software and ETL automation tool specializing in simple, scalable data syncing from many sources to cloud data warehouses. Stitch simplifies cloud data automation with a code-free interface and robust monitoring.

Apache Kafka

Leading distributed streaming platform that automates real-time data ingestion, processing, and delivery within modern data architectures. Kafka serves as the backbone of real-time data pipelines across big data environments and integrates with ETL and workflow automation platforms.
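Two ideas explain most of Kafka's behavior: messages with the same key go to the same partition (preserving their order), and each consumer group tracks its own offset, so many systems can read the same stream independently. The toy class below illustrates only those concepts in plain Python. It is not Kafka's API: the class and method names are hypothetical, and it hashes keys with `zlib.crc32`, whereas Kafka's default partitioner uses a different hash.

```python
import zlib
from collections import defaultdict


class MiniLog:
    """Toy append-only, partitioned log illustrating Kafka-style concepts (not Kafka's API)."""

    def __init__(self, partitions=3):
        self.partitions = [[] for _ in range(partitions)]
        # (consumer group, partition) -> next offset that group will read
        self.committed = defaultdict(int)

    def produce(self, key, value):
        # Same key -> same partition, so per-key ordering is preserved.
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)

    def poll(self, group, partition, max_records=10):
        # Reading does not remove messages; other groups can still read them.
        start = self.committed[(group, partition)]
        return start, self.partitions[partition][start:start + max_records]

    def commit(self, group, partition, next_offset):
        self.committed[(group, partition)] = next_offset
```

A billing service and an analytics service could each poll the same partition: committing an offset in one group has no effect on the other, which is how one event stream can feed many automated workflows.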

Alteryx

Advanced data integration, transformation, and automation solution for analytics. Alteryx provides a powerful drag-and-drop interface for building end-to-end data workflows, integrating cleansing, enrichment, and big data automation. It excels in supporting both technical and business users by automating everything from data preparation to advanced analytics.